Can adding more GPUs to your system supercharge AI model performance? That’s the question we set out to answer in this DeepSeek-R1 benchmark test using AMD GPUs. With a variety of models and tasks, we push the limits of VRAM, processing power, and efficiency to see if doubling the hardware makes a real difference or if there’s more to the story.

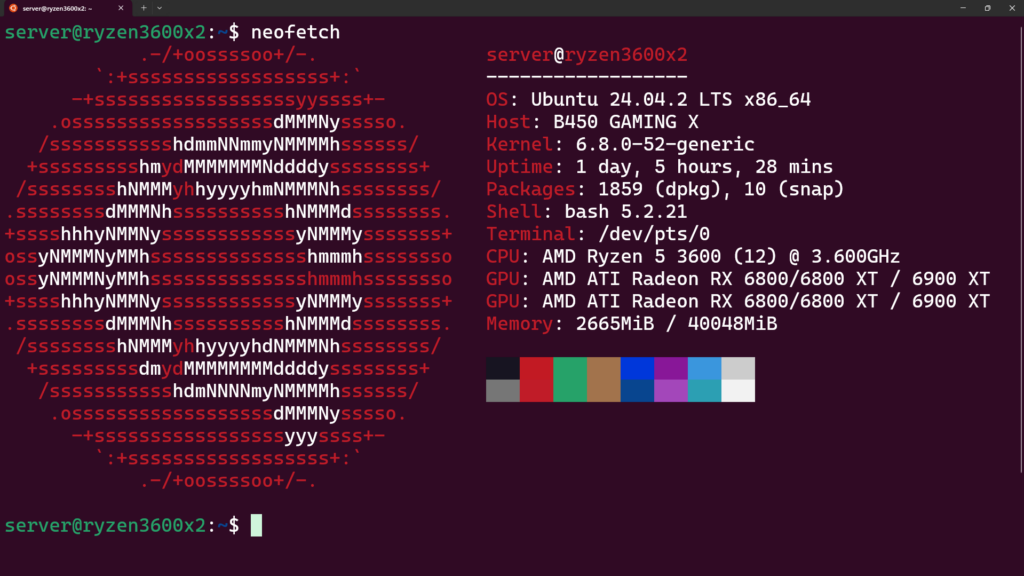

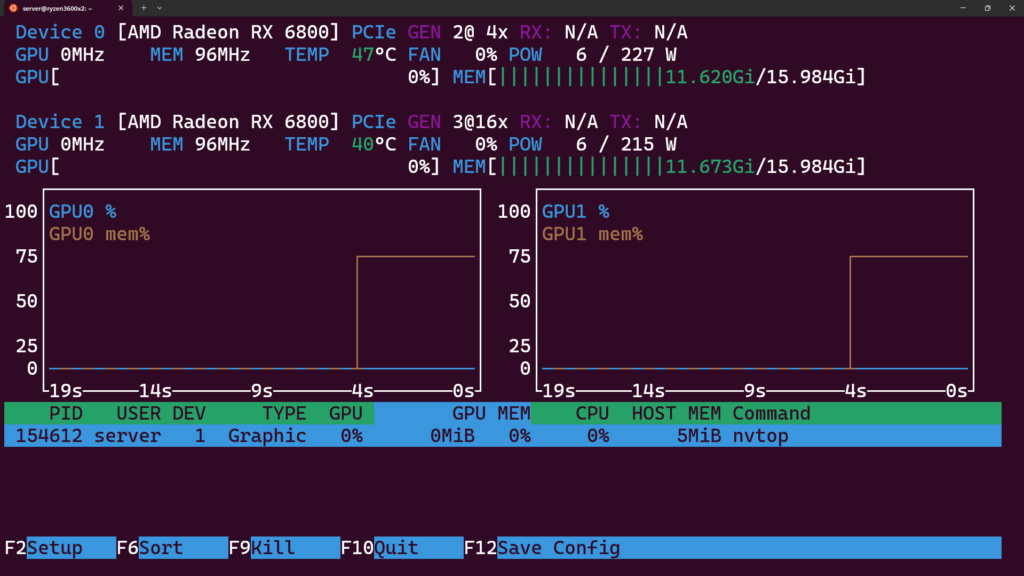

For the tests in this article we will use a machine with a Ryzen 5 3600 CPU, 40GBs of RAM and two AMD Radeon RX6800 with 16GBs of VRAM each. With this amount of VRAM (32GBs) we will be able to run all models until 32b (24GBs of VRAM) on the GPU. Thanks to the amount of RAM we have, we should also be able to run the 70b (40GBs of VRAM) model, but only on the CPU.

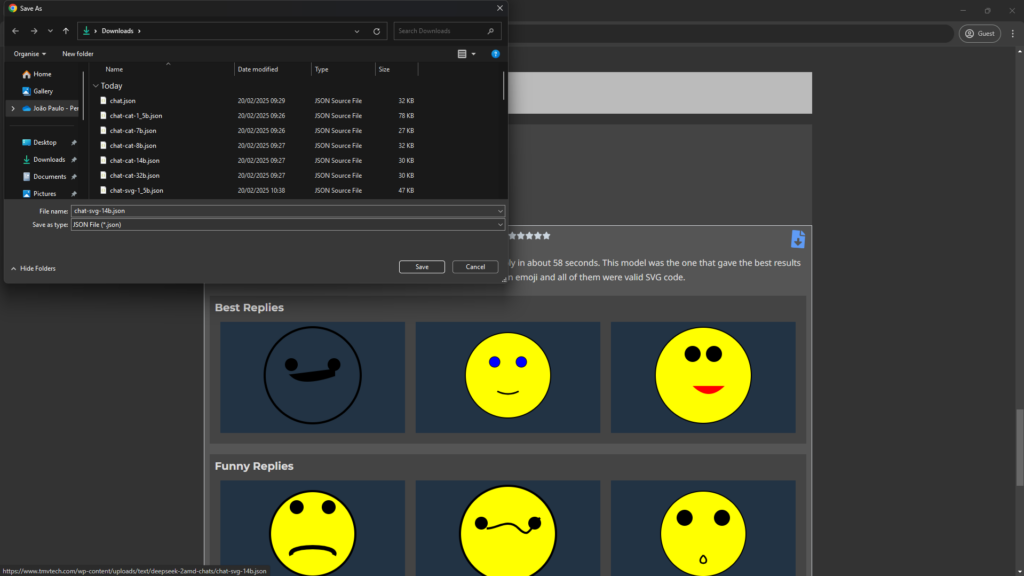

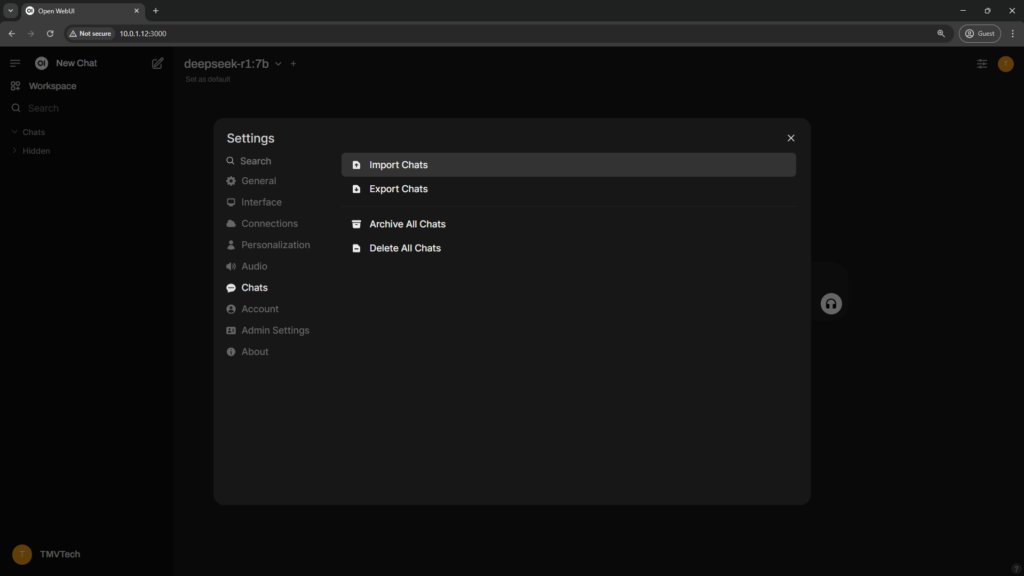

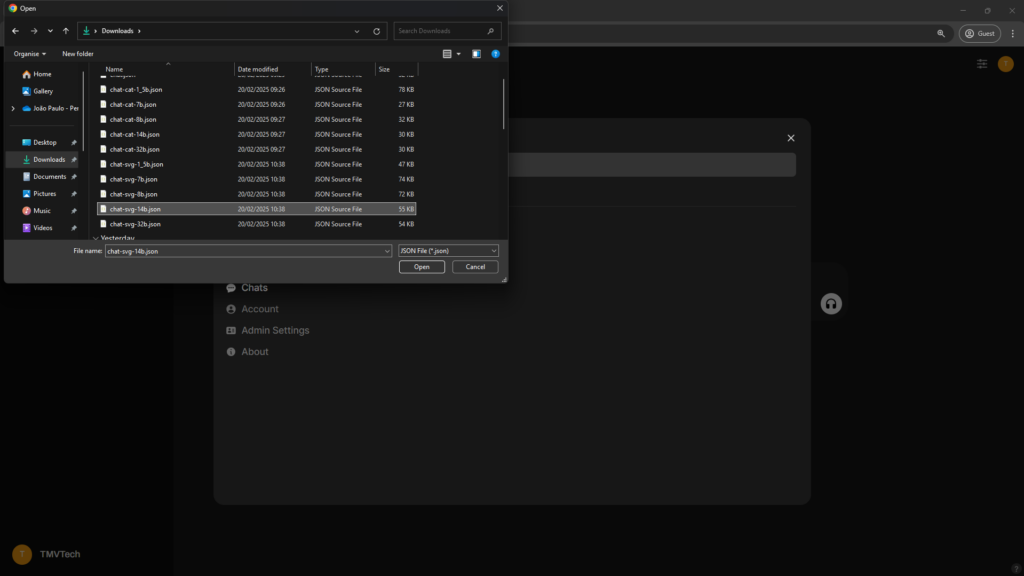

If you want to import our test chats to check the results for yourself you will need to have OpenWebUI running and, if you want to continue a chat, you will also need Ollama. You can learn how to setup Ollama and OpenWebUI with one of these articles below:

Once you have OpenWebUI running, follow the steps below to import a chat:

If you want to calculate the average time and token per second in a chat, you can run this python script and give it the path to the chat file:

Python Script

import json

import os

def main():

while True:

try:

# Get input file path

path = input("Paste file path: ").strip()

if path.lower() == "quit":

break

# Remove quotes if necessary

if path.startswith('"') and path.endswith('"'):

path = path[1:-1]

# Check if file exists and has a json extension

if not os.path.exists(path) or "json" not in os.path.splitext(path)[1]:

print("Invalid file.")

continue

# Read and parse the JSON file

with open(path, "r", encoding="utf-8") as f:

data = json.load(f)

# Expect the JSON to be a list with a single element

if not isinstance(data, list) or len(data) != 1:

print("Invalid file.")

continue

obj = data[0]

# Navigate to chat -> history -> messages

messages = obj.get("chat", {}).get("history", {}).get("messages")

if messages is None:

print("Invalid file.")

continue

# Extract usage objects from messages

usages = []

for key, message in messages.items():

usage = message.get("usage")

if usage is not None:

usages.append(usage)

if not usages:

print("No usage data found.")

continue

# Calculate averages

total_time = 0.0

total_tokens = 0.0

valid_count = 0

for usage in usages:

# Get the values; if missing, skip this usage

total_duration = usage.get("total_duration")

resp_tokens = usage.get("response_token/s")

if total_duration is None or resp_tokens is None:

continue

total_time += total_duration

total_tokens += resp_tokens

valid_count += 1

if valid_count == 0:

print("No valid usage data found.")

continue

# Calculate average time (convert from nanoseconds to seconds) and tokens per second

avg_time_secs = (total_time / valid_count) / 1_000_000_000.0

avg_tokens = total_tokens / valid_count

# Print the results

print(f"Tokens/Sec: {avg_tokens:.3f}")

print(f"Time /Secs: {avg_time_secs:.3f}")

except Exception as ex:

print("Error:", ex)

if __name__ == "__main__":

main()

We will now do some tests, very similar to what we did in a previous article with NVIDIA GPUs. At the end of this article, we will also compare the model’s speed with 2 GPUs against only one.

Sentence parsing and counting

This prompt will test how well can the model remember a phrase it generated, if it can count words and if it can divide a word in letters.

Prompt

Write me one random sentence about a dog then tell me the number of words in the sentence you wrote and, finally, the fourth letter in the third word and wether it is a vowel or a consonant.

deepseek-r1:1.5b

deepseek-r1:7b

deepseek-r1:8b

The 8b model gave us the correct reply 7 times, more than double the previous model. It averaged about 30.42 Tokens per second and gave us a reply in about 14 seconds, 5 seconds less than the previous model, which means that 7b spent more time thinking than 8b.

Top Reply

The sentence “Dogs are loyal companions” has 4 words. The third word is “loyal,” where the fourth letter is ‘a,’ which is a vowel.

deepseek-r1:14b

The 14b model gave us the correct reply only 4 times, sadly, less than the previous model. It averaged about 19.35 Tokens per second and gave us a reply in about 50 seconds, 35 seconds more than the previous model.

Top Reply

“My golden retriever loves chasing tennis balls in the park.”

This sentence has 10 words. The third word is “retriever,” and the fourth letter in that word is “r”, which is a consonant.

deepseek-r1:32b

The 32b model gave us the correct reply 8 times. It averaged about 9.24 Tokens per second and gave us a reply in about 1 minute and 25 seconds. This was the model that gave us the most correct replies but also the slowest.

Top Reply

The golden retriever fetched the ball with ease.

- Number of words: 8

- Fourth letter in the third word: ‘R’ (consonant)

Deductive arrays and offsets

This prompt will test if the model can get the relationship between letters and numbers while encouraging DeepSeek to generalize by requiring multiple solutions with the same method of resolution.

Prompt

If A is equal to the number 0, what is the number of F, J, W and Z.

deepseek-r1:1.5b

The 1.5b model only got the correct answer once. It averaged about 50.94 Tokens per second and gave us a reply in about 8 seconds.

Top Reply

Let’s determine the value of each letter:

- F: The 6th letter of the alphabet (since A is 1). F=5

- J: The 10th letter. J=9

- W: The 23rd letter. W=22

- Z: The 26th letter. Z=25

deepseek-r1:7b

The 7b model gave us the correct reply 9 times, almost a perfect score. It averaged about 43.9 Tokens per second and gave us a reply in about 33 seconds.

Top Reply

The numbers corresponding to the letters are based on their positions in the alphabet, with A being 0 and each subsequent letter increasing by 1. Therefore:

- F corresponds to 5

- J corresponds to 9

- W corresponds to 22

- Z corresponds to 25

deepseek-r1:8b

The 8b model could not come up with the correct reply. It averaged about 27.75 Tokens per second and gave us a reply in about 19 seconds.

Top Reply

To determine how many times each letter F, J, W, and Z appear in the word “Zero”, let’s analyze the word step by step.

Step 1: Understand the Word

- The word “Zero” is spelled as follows: Z E R O

Step 2: Count Each Letter

- F: Does “Zero” contain the letter ‘F’? No

- J: Does “Zero” contain the letter ‘J’? No

- W: Does “Zero” contain the letter ‘W’? No

- Z: Does “Zero” contain the letter ‘Z’? Yes, at the beginning.

Final Answer

- F=0

- J=0

- W=0

- Z=1

deepseek-r1:14b

The 14b model gave us the correct reply only 4 times, sadly, less than the 7b model but at least more than 8b. It averaged about 22.13 Tokens per second and gave us a reply in about 37 seconds, only 6 seconds more than 7b.

Top Reply

The number values corresponding to F, J, W, and Z when A equals 0 (using zero-based indexing) are as follows:

- F: 5

- J: 9

- W: 22

- Z: 25

Each letter’s value is determined by its position in the alphabet minus one.

deepseek-r1:32b

The 32b model gave us the correct reply 9 times, like 7b. It averaged about 8.87 Tokens per second and gave us a reply in about 2 minutes and 22 seconds.

Top Reply

The numbers corresponding to each letter when starting from A=0 are as follows: F: 5, J: 9, W: 22, Z: 25

Answer: F is 5, J is 9, W is 22, and Z is 25.

Strawberries and Peppermints

This prompt tests multiple aspects of an LLM’s reasoning and text processing abilities like basic counting, character recognition, memory, context retention and how it handles multiple similar tasks. Sadly, it seems to have been too complex, as only 14b gave us the correct reply and only once.

Prompt

In the word strawberry, how many of it’s letters are vowels and how many of them are Rs? Also, on the word peppermint how many letters are vowels and how many Ps are there?

deepseek-r1:1.5b

deepseek-r1:7b

deepseek-r1:8b

deepseek-r1:14b

The 14b model was the only one to give us a correct reply. It averaged about 21 Tokens per second and gave us a reply in about 1 minute and 29 seconds.

Top Reply

In the word “strawberry”:

- There are 2 vowels: A and E.

- There are 3 Rs in the letters.

In the word “peppermint”:

- There are 3 vowels: E, E, and I.

- There are 3 Ps in the letters.

Positional and time awareness

This prompt tests time-based reasoning, interval mapping, reading comprehension and logical deduction. A key aspect of the answers we expect is that it must include not only what the cat was doing, but also where it was.

Prompt

Every day from 2PM to 4PM the household cat, Tobias, is in the window. From 2 until 3, Tobias is looking at birds. For the next half hour, Tobias is sleeping. On the final half hour, Tobias is cleaning himself. The time is 3:14PM, where and what is Tobias doing.

deepseek-r1:1.5b

deepseek-r1:7b

deepseek-r1:8b

deepseek-r1:14b

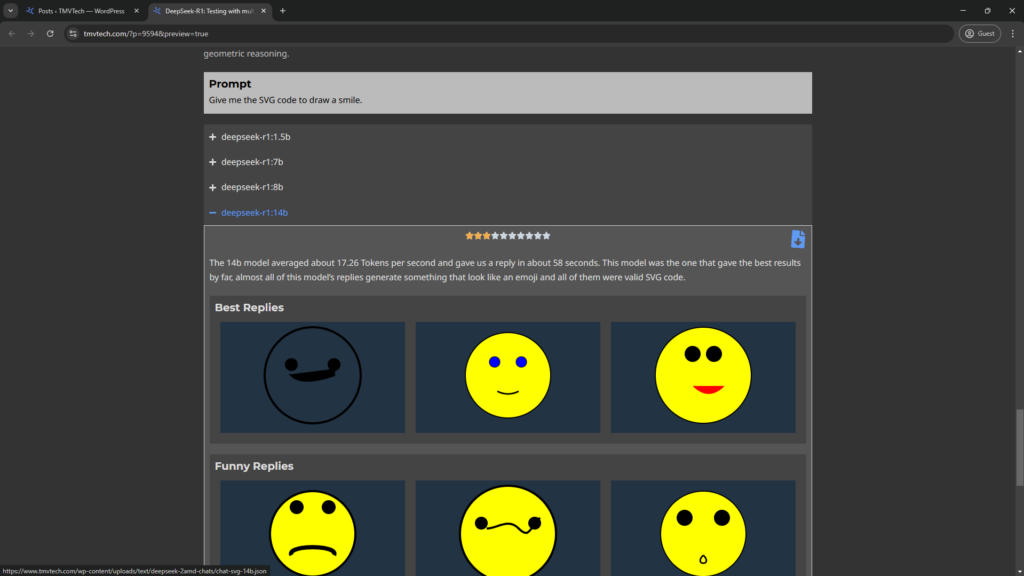

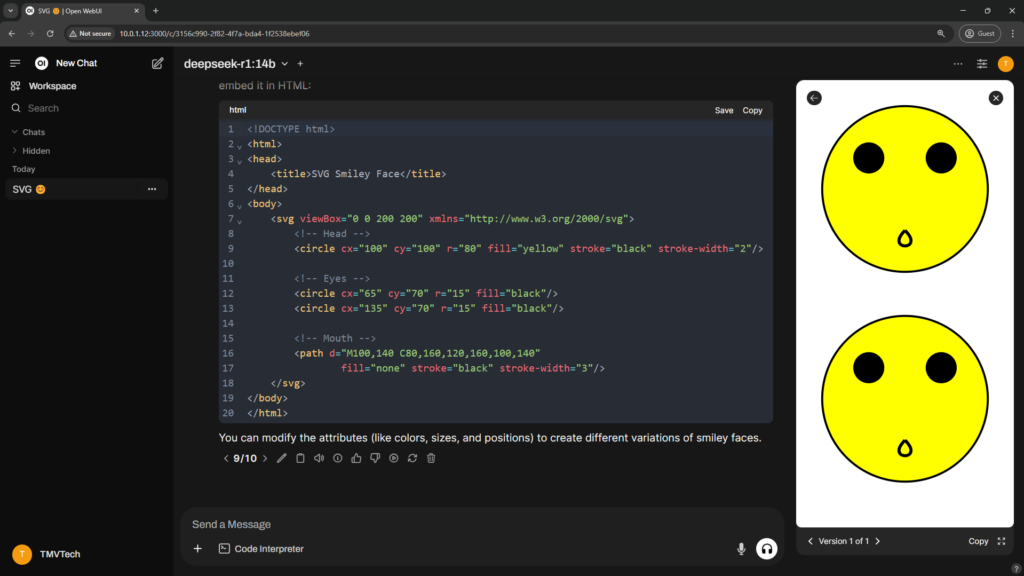

SVG Generation

This prompt tests code generation, interpretation of open-ended requests (a “smile” is a broad thing), knowledge of SVGs and also spatial and geometric reasoning.

Prompt

deepseek-r1:1.5b

deepseek-r1:7b

deepseek-r1:8b

deepseek-r1:14b

Now we take the average of every prompt (5 prompts, 10 times each, on 1 GPU and on 2 GPUs, on total 100 tests) and write the average times in the table below:

| Model | 1 GPU Token/s | 1 GPU Time/s | 2 GPU Token/s | 2 GPU Time/s |

|---|---|---|---|---|

| 1.5b | 87.91 | 10.11 | 70.08 | 10.36 |

| 7b | 37.75 | 23.25 | 40.12 | 25.12 |

| 8b | 28.84 | 43.30 | 32.59 | 22.30 |

| 14b | 19.30 | 46.36 | 19.23 | 53.94 |

| 32b | 8.55 | 102.6 |

Keeping in mind the random nature of LLMs (regenerating a reply can give vastly different results), the time and token differences we see don’t tell us that there is a clear improvement.

What I conclude with this data is that the amount of GPUs you have does not change the speed at which the model replies, either 1 or 20, as long as you have enough VRAM. All models will run at the same speed.

The only improvement you get from adding GPUs to your system is the ability to run larger models. In my case, with only one GPU (16GBs of VRAM) we could only run 14b or below and with 2 GPUs (32GBs of VRAM) we could also run 32b (uses 24GBs of VRAM), sadly we could not run the 70b model as it requires around 40GBs of VRAM.

And that’s all, thanks for reading and stay tuned for more tech insights and tutorials. Until next time, and keep exploring the world of tech!